Google Kubernetes Engine

A hosted Kubernetes cluster service whose nodes run on Compute Engine instances.

It is a fully managed Kubernetes solution.

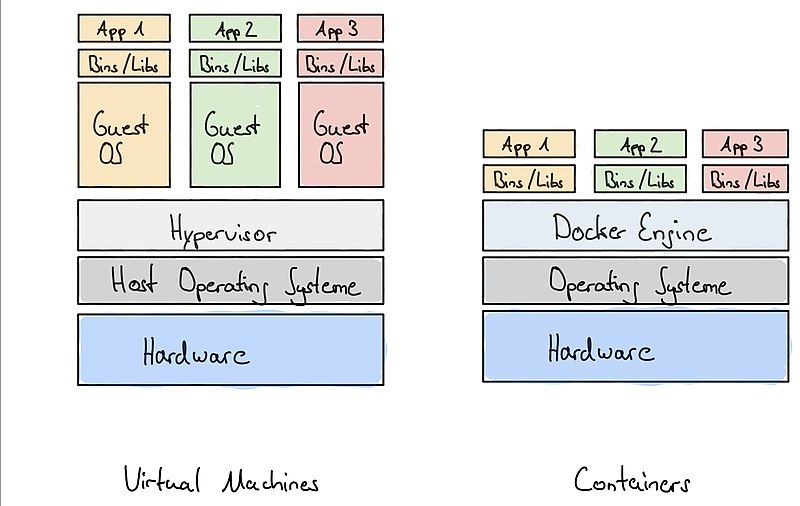

Containers

Unlike VMs, containers share the host kernel. However, each image can ship a different operating system userland, as long as it runs on the same kernel.

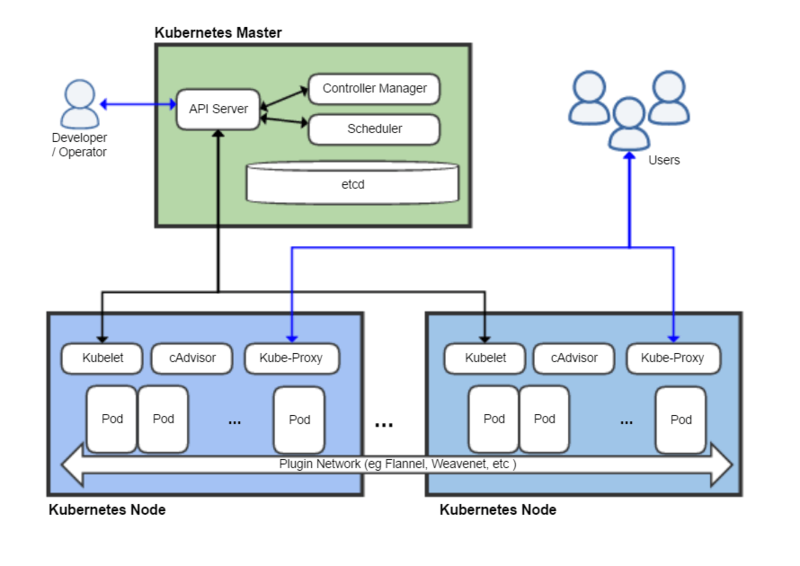

Kubernetes

Container orchestrators such as Kubernetes manage containers, their lifecycles, their connectivity, and more.

Google Kubernetes Engine is based on open source Kubernetes and adds some features on top of it.

Clusters

A cluster consists of at least one cluster master and multiple worker machines called nodes.

These master and node machines run the Kubernetes cluster orchestration system.

The cluster master runs the Kubernetes control plane processes, such as the API server, the scheduler, and the controllers.

The cluster location can be either a zone or a region.
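Compute Engine zone names are formed from the region name plus a zone letter (for example, zone us-central1-a belongs to region us-central1). The sketch below assumes that naming convention and tells a zonal cluster location apart from a regional one; classify_location is a hypothetical helper, not part of any GKE API.

```python
import re

# Assumption: zones are named "<region>-<letter>" (e.g. "us-central1-a") and
# regions "<area>-<direction><number>" (e.g. "us-central1"). Hypothetical helper.
REGION_PATTERN = r"[a-z]+-[a-z]+\d+"
ZONE_RE = re.compile(rf"^({REGION_PATTERN})-([a-z])$")
REGION_RE = re.compile(rf"^{REGION_PATTERN}$")


def classify_location(location: str) -> tuple[str, str]:
    """Return ("zonal", region) or ("regional", region) for a cluster location."""
    zone_match = ZONE_RE.match(location)
    if zone_match:
        # A zonal cluster runs a single control plane in one zone.
        return "zonal", zone_match.group(1)
    if REGION_RE.match(location):
        # A regional cluster spreads the control plane and nodes over the region's zones.
        return "regional", location
    raise ValueError(f"unrecognized location: {location!r}")


print(classify_location("us-central1-a"))  # ('zonal', 'us-central1')
print(classify_location("europe-west1"))   # ('regional', 'europe-west1')
```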

Nodes

Nodes can be defined individually or in pools. GKE offers a fully managed node experience:

- Provides a base image and keeps it updated.

- Provides node auto-repair.

- Provides node auto-upgrade.

- Groups nodes into node pools.

Node images

- Container-Optimized OS (maintained by Google, based on Linux 4.4)

- Container-Optimized OS with containerd: containerd is used directly as the container runtime, instead of Docker.

- Ubuntu

- Ubuntu with containerd: containerd is used directly as the container runtime, instead of Docker.

- Windows Server

The image can be changed by editing the node pool, but this action recreates the nodes, so all of their Pods are deleted and recreated.

Node pools

- A node pool is a group of nodes with the same configuration, similar to an instance group.

- Node pools support custom machine types, preemptible VMs, GPUs, and local SSDs.

- A specific Kubernetes version or node image type can be set per pool.

- Node pools can autoscale.

- They can be specific to one particular zone.

- Multi-zonal or regional clusters: node pools can span several zones. Note that when node pools are placed in a multi-zonal cluster, GKE replicates every pool into each of the cluster's zones, so the node count is multiplied by the number of zones (watch your quota).
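Because a pool's node count applies in each zone, a rough quota estimate multiplies it by the number of zones. A minimal sketch, assuming every pool is replicated into every cluster zone as described above; NodePool and cluster_footprint are hypothetical names, and the pool sizes are made-up examples.

```python
from dataclasses import dataclass


@dataclass
class NodePool:
    name: str
    nodes_per_zone: int  # assumed: the node count is applied in each cluster zone
    vcpus_per_node: int


def cluster_footprint(pools: list, zone_count: int) -> tuple:
    """Total nodes and vCPUs when every pool is replicated into every zone."""
    nodes = sum(p.nodes_per_zone for p in pools) * zone_count
    vcpus = sum(p.nodes_per_zone * p.vcpus_per_node for p in pools) * zone_count
    return nodes, vcpus


# Hypothetical pools in a regional cluster spanning 3 zones.
pools = [NodePool("default-pool", 3, 4), NodePool("gpu-pool", 1, 8)]
print(cluster_footprint(pools, zone_count=3))  # (12, 60)
```

A pool that looks like 4 nodes in the configuration therefore consumes 12 nodes' worth of quota in a 3-zone cluster.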

For security, users can also define the level of access that nodes have to Google Cloud APIs.

Node auto-upgrade

Google Cloud automatically upgrades the Kubernetes version on nodes, applying Kubernetes and OS security updates.

Users can define maintenance windows that restrict when these upgrades happen.

When creating the cluster, you can either enroll it in a release channel, which upgrades it automatically, or pin a specific Kubernetes version.
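A maintenance window is essentially a recurring time range in which upgrades may start. The sketch below checks whether a moment falls inside a daily window that may cross midnight; in_daily_window is a hypothetical helper, the window itself is a made-up example, and it assumes naive datetimes in UTC and a window shorter than 24 hours.

```python
from datetime import datetime, time, timedelta


def in_daily_window(moment: datetime, start: time, duration: timedelta) -> bool:
    """True if `moment` falls in a daily window that may cross midnight.

    Assumptions: naive datetimes interpreted in UTC, duration under 24 hours.
    """
    todays_opening = datetime.combine(moment.date(), start)
    # The window active at `moment` may have opened today or the day before.
    for opened in (todays_opening - timedelta(days=1), todays_opening):
        if opened <= moment < opened + duration:
            return True
    return False


# Hypothetical window: every day from 23:00 for 4 hours.
start, length = time(23, 0), timedelta(hours=4)
print(in_daily_window(datetime(2024, 1, 2, 1, 30), start, length))  # True
print(in_daily_window(datetime(2024, 1, 2, 12, 0), start, length))  # False
```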

Node auto-repair

GKE periodically checks for nodes that are unreachable, report a NotReady status, or are low on disk space.

Unhealthy nodes (failing health checks for over 10 minutes, or running out of space on the boot disk) have their Pods drained, and the node is recreated.
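The repair rule above can be modeled as a simple decision over node status. A minimal sketch, assuming the 10-minute threshold from the text and a two-step drain-then-recreate action; NodeStatus, needs_repair, and repair_plan are hypothetical names, and GKE's real health checks are internal to the service.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Threshold taken from the text above (assumed); GKE's real checks are internal.
UNHEALTHY_THRESHOLD = timedelta(minutes=10)


@dataclass
class NodeStatus:
    name: str
    ready: bool                          # False if NotReady or unreachable
    unhealthy_since: Optional[datetime]  # time of the first failed check
    boot_disk_full: bool = False


def needs_repair(node: NodeStatus, now: datetime) -> bool:
    if node.boot_disk_full:
        return True
    if node.ready or node.unhealthy_since is None:
        return False
    return now - node.unhealthy_since > UNHEALTHY_THRESHOLD


def repair_plan(nodes: list, now: datetime) -> list:
    """Hypothetical action list: drain each unhealthy node, then recreate it."""
    plan = []
    for node in nodes:
        if needs_repair(node, now):
            plan += [("drain", node.name), ("recreate", node.name)]
    return plan


now = datetime(2024, 1, 1, 12, 0)
nodes = [
    NodeStatus("node-a", False, now - timedelta(minutes=15)),  # unhealthy too long
    NodeStatus("node-b", False, now - timedelta(minutes=5)),   # still within grace
    NodeStatus("node-c", True, None),
    NodeStatus("node-d", True, None, boot_disk_full=True),
]
print(repair_plan(nodes, now))
```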

Cluster auto-scaling

Users can also enable vertical Pod autoscaling and node auto-provisioning.

With cluster autoscaling, the control plane automatically resizes the node pools based on the Pods' resource requests and workload, moving Pods from one node to others when it removes nodes.

The cluster autoscaler causes brief disruptions when it restarts Pods on different nodes, so autoscaling is not recommended for services that cannot tolerate disruption.

The autoscaler overrides any manual node management operation performed by the user.

All nodes in a single node pool have the same set of labels.

It shouldn't be used with large clusters (more than 100 nodes).
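To make the scale-up idea concrete, the sketch below estimates how many nodes to add for Pods that cannot be scheduled. This is a deliberate simplification, not the real cluster autoscaler (which simulates the scheduler): it assumes only CPU requests matter, packs Pods first-fit-decreasing onto hypothetical new nodes of one machine type, and caps growth at the pool maximum. nodes_to_add is a hypothetical name.

```python
def nodes_to_add(pending_cpu: list, node_cpu: float,
                 current_nodes: int, max_nodes: int) -> int:
    """Estimate how many nodes to add for unschedulable Pods.

    Simplification: only CPU requests are considered, and Pods are packed
    first-fit-decreasing onto hypothetical new nodes of one machine type.
    """
    free_cpu = []  # remaining CPU on each hypothetical new node
    for request in sorted(pending_cpu, reverse=True):
        if request > node_cpu:
            continue  # never fits this machine type, so adding nodes won't help
        for i, free in enumerate(free_cpu):
            if free >= request:
                free_cpu[i] = free - request
                break
        else:
            free_cpu.append(node_cpu - request)
    # Never grow the pool beyond its configured maximum size.
    return min(len(free_cpu), max(0, max_nodes - current_nodes))


print(nodes_to_add([1.5, 1.5, 1.0, 0.5, 3.0], node_cpu=4.0,
                   current_nodes=3, max_nodes=10))  # 2
```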

Cluster networking

A cluster can be private or public; in a public cluster, the nodes, including the masters, have public IP addresses.

Clusters can either be VPC-native, using alias IP ranges with specific Pod and Service address ranges, or routes-based.
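In a VPC-native cluster, the Pod address range caps the number of nodes, because GKE carves a fixed-size block out of it for each node. GKE sizes that block to hold roughly twice the maximum Pods per node (a /24 for the default of 110 Pods). The sketch below applies that rule as an assumption; node_prefix_len and max_nodes are hypothetical helpers, and the 10.4.0.0/14 range is a made-up example.

```python
import ipaddress


def node_prefix_len(max_pods_per_node: int) -> int:
    """Smallest per-node block holding at least twice the Pod count (assumed rule)."""
    host_bits = (2 * max_pods_per_node - 1).bit_length()
    return 32 - host_bits


def max_nodes(pod_range: str, max_pods_per_node: int = 110) -> int:
    """How many per-node blocks fit in the cluster's Pod range."""
    network = ipaddress.ip_network(pod_range)
    per_node = node_prefix_len(max_pods_per_node)
    if network.prefixlen > per_node:
        raise ValueError("Pod range is smaller than a single node's block")
    return 2 ** (per_node - network.prefixlen)


print(node_prefix_len(110))          # 24
print(max_nodes("10.4.0.0/14"))      # 1024
print(max_nodes("10.4.0.0/14", 32))  # 4096 (each node gets a /26)
```

Lowering the maximum Pods per node shrinks each node's block, which lets the same Pod range serve more nodes.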

Cluster security

Users can also authenticate to the Kubernetes API of their cluster using Google OAuth2 access tokens.

When a cluster is created, GKE configures kubectl to use Application Default Credentials to authenticate to the cluster.

Kubernetes Role-Based Access Control (RBAC) can be set up to integrate with Google Groups in G Suite.

Application-layer Secrets Encryption: Kubernetes Secrets can be stored encrypted in etcd, with the encryption keys managed in Google Cloud KMS.

Legacy authentication options are also available: client certificates (difficult to revoke) and HTTP basic authentication.

Other Cluster features

Istio integration, and GKE usage metering (down to the level of Kubernetes labels).

Alpha clusters

A short-lived cluster that is not covered by the GKE SLA and cannot be upgraded, but has all Kubernetes APIs and features enabled, including alpha ones.

This option becomes selectable only after disabling auto-repair and auto-upgrade in the node pools.

They are automatically deleted after 30 days.

Anthos

Anthos is a platform that lets you interconnect your GKE clusters with Kubernetes clusters deployed in an on-premises data center, using Istio as the service mesh.

Networks are connected with Cloud Interconnect.

Applications

A Kubernetes Application is a collection of containers, services, and configuration that are managed together.

GKE allows one-step deployment of applications from Google Cloud Marketplace, as well as deployment from the gcloud command line.

Services

A Kubernetes Service is an abstraction which defines a logical set of Pods and a policy by which to access them.

Exposing a Pod from the Google Cloud Console lets the user create a Service for it.

Service types

- ClusterIP: it exposes the Service on a cluster-internal IP. Choosing this value makes the Service only reachable from within the cluster. This is the default ServiceType.

- NodePort: it exposes the Service on each Node's IP at a static port (the NodePort). A ClusterIP Service, to which the NodePort Service routes, is automatically created. You'll be able to contact the NodePort Service, from outside the cluster, by requesting <NodeIP>:<NodePort>.

- LoadBalancer: it exposes the Service externally using a cloud provider's load balancer. NodePort and ClusterIP Services, to which the external load balancer routes, are automatically created. On each node, kube-proxy programs iptables rules that forward the traffic to the Pod IPs.

- ExternalName: it maps the Service to the content of the externalName field (e.g. foo.bar.example.com), by returning a CNAME record.

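- Headless: a Service with clusterIP set to None. No cluster IP is allocated, and DNS returns the IPs of the backing Pods directly.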
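The Service types stack on each other: a LoadBalancer Service also gets a NodePort and a ClusterIP, and a NodePort Service also gets a ClusterIP. The sketch below models that layering by listing the addresses a client could use for each type. reachable_addresses and IMPLIED_LAYERS are hypothetical names, the IP addresses are made up, and it assumes the Kubernetes default NodePort range of 30000-32767; a headless Service (one with no cluster IP) is modeled by passing cluster_ip=None.

```python
from typing import Optional, Tuple

# Each type builds on the one below it, as in the list of Service types above.
IMPLIED_LAYERS = {
    "ClusterIP": ["ClusterIP"],
    "NodePort": ["NodePort", "ClusterIP"],
    "LoadBalancer": ["LoadBalancer", "NodePort", "ClusterIP"],
    "ExternalName": ["ExternalName"],
}


def reachable_addresses(service_type: str, port: int,
                        cluster_ip: Optional[str] = None,
                        node_ips: Tuple[str, ...] = (),
                        node_port: Optional[int] = None,
                        lb_ip: Optional[str] = None,
                        external_name: Optional[str] = None) -> list:
    """List the addresses a client could use, outermost layer first."""
    layers = IMPLIED_LAYERS[service_type]
    if "ExternalName" in layers:
        return [f"CNAME {external_name}"]  # DNS alias only, no proxying
    addresses = []
    if "LoadBalancer" in layers:
        addresses.append(f"{lb_ip}:{port}")
    if "NodePort" in layers:
        # Assumed: Kubernetes' default NodePort range is 30000-32767.
        if node_port is None or not 30000 <= node_port <= 32767:
            raise ValueError("nodePort outside the default range")
        addresses += [f"{ip}:{node_port}" for ip in node_ips]
    if cluster_ip is None:
        addresses.append("DNS -> Pod IPs")  # headless: no virtual IP
    else:
        addresses.append(f"{cluster_ip}:{port}")  # reachable in-cluster only
    return addresses


print(reachable_addresses("LoadBalancer", 80, cluster_ip="10.8.0.10",
                          node_ips=("10.128.0.2", "10.128.0.3"),
                          node_port=31000, lb_ip="34.1.2.3"))
```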

Pods

Pods are the smallest, most basic deployable objects in Kubernetes.

A Pod represents a single instance of a running process in your cluster.

Pods contain one or more containers, such as Docker containers. Containers in a Pod share a single IP address and network namespace, so they can reach each other on localhost. Optionally, they can share data through storage volumes, such as Google Cloud disks.

When a Pod runs multiple containers, the containers are managed as a single entity and share the Pod's resources.

Pod Lifecycle

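- Pending: the Pod has been accepted by the cluster, but one or more containers are not yet running (for example, it is still being scheduled or pulling images).

- Running: the Pod is bound to a node, all containers have been created, and at least one is running.

- Succeeded: all containers have terminated successfully.

- Failed: all containers have terminated, and at least one terminated in failure.

- Unknown: the state of the Pod cannot be determined, typically because its node cannot be reached.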
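The phase rules above can be written as a small function over container states. This is a simplified sketch, not the kubelet's logic: it assumes restartPolicy Never (so a "waiting" container has not started yet) and represents each container as a (state, exit_code) pair; Phase and pod_phase are hypothetical names.

```python
from enum import Enum
from typing import List, Optional, Tuple


class Phase(Enum):
    PENDING = "Pending"
    RUNNING = "Running"
    SUCCEEDED = "Succeeded"
    FAILED = "Failed"
    UNKNOWN = "Unknown"


def pod_phase(scheduled: bool, node_reachable: bool,
              containers: List[Tuple[str, Optional[int]]]) -> Phase:
    """containers: (state, exit_code), state in waiting/running/terminated.

    Simplification: assumes restartPolicy Never.
    """
    if not scheduled:
        return Phase.PENDING  # not yet bound to a node
    if not node_reachable:
        return Phase.UNKNOWN  # node stopped reporting, state cannot be determined
    states = [state for state, _ in containers]
    if "waiting" in states:
        return Phase.PENDING  # some container not created or started yet
    if "running" in states:
        return Phase.RUNNING
    # Every container has terminated: success only if all exited with code 0.
    if all(code == 0 for _, code in containers):
        return Phase.SUCCEEDED
    return Phase.FAILED


print(pod_phase(True, True, [("terminated", 0), ("terminated", 137)]).value)  # Failed
```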

Deployment

A Deployment manages a set of multiple identical Pods.

It runs multiple replicas of an application, using a Pod template that specifies the Pods, and automatically replaces any instance that fails.

It defines how high availability and autoscaling are performed over the Pods.

It creates Pods and manages their lifecycle.

It is used for rolling updates, which change the version of the application running in the containers.

It makes sure that the service keeps running properly.
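During a rolling update, a Deployment's maxSurge and maxUnavailable settings bound how many Pods may exist and how many must stay available. Both default to 25% in Kubernetes; when given as percentages, maxSurge rounds up and maxUnavailable rounds down, and they cannot both be 0. The sketch below does that arithmetic; resolve and rollout_bounds are hypothetical helper names.

```python
from typing import Union

Value = Union[int, str]  # absolute count, or a percentage such as "25%"


def resolve(value: Value, replicas: int, round_up: bool) -> int:
    """Turn an absolute count or a percentage of replicas into a Pod count."""
    if isinstance(value, int):
        return value
    scaled = int(value.rstrip("%")) * replicas
    return -(-scaled // 100) if round_up else scaled // 100  # integer ceil/floor


def rollout_bounds(replicas: int, max_surge: Value = "25%",
                   max_unavailable: Value = "25%") -> tuple:
    """Return (max total Pods, min available Pods) during a rolling update."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    return replicas + surge, replicas - unavailable


print(rollout_bounds(10))  # (13, 8)
```

With 10 replicas and the defaults, the rollout may briefly run 13 Pods but never drops below 8 available ones.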

Workloads

Containers, whether for applications or batch jobs, are collectively called workloads.

Before you deploy a workload on a GKE cluster, you must first package the workload into a container.

Workload types

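- Pods: the basic deployable unit described above.

- Controllers: objects such as Deployments, StatefulSets, DaemonSets, Jobs, and CronJobs, which create and manage Pods.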

High Availability

Regional control plane: GKE can replicate the Kubernetes control plane across 3 zones of a region, which increases the uptime SLA from 99.5% to 99.95% and provides zero-downtime upgrades.

Cluster federation: it is also possible to have a federated control plane proxying several clusters, even across different cloud providers.
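To put the 99.5% and 99.95% uptime figures in perspective, the sketch below converts an uptime percentage into the downtime it permits over a 30-day month. The 30-day month is an assumption for illustration, and allowed_downtime_minutes is a hypothetical helper; Decimal avoids floating-point rounding in the percentages.

```python
from decimal import Decimal


def allowed_downtime_minutes(sla_percent: str, days: int = 30) -> Decimal:
    """Downtime an uptime percentage permits over `days` days, in minutes."""
    period = Decimal(days * 24 * 60)  # minutes in the period
    return period * (Decimal(100) - Decimal(sla_percent)) / Decimal(100)


for sla in ("99.5", "99.95"):
    print(sla, allowed_downtime_minutes(sla))
```

Over a 30-day month, 99.5% allows about 216 minutes of downtime, while 99.95% allows about 21.6 minutes, a tenfold reduction.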